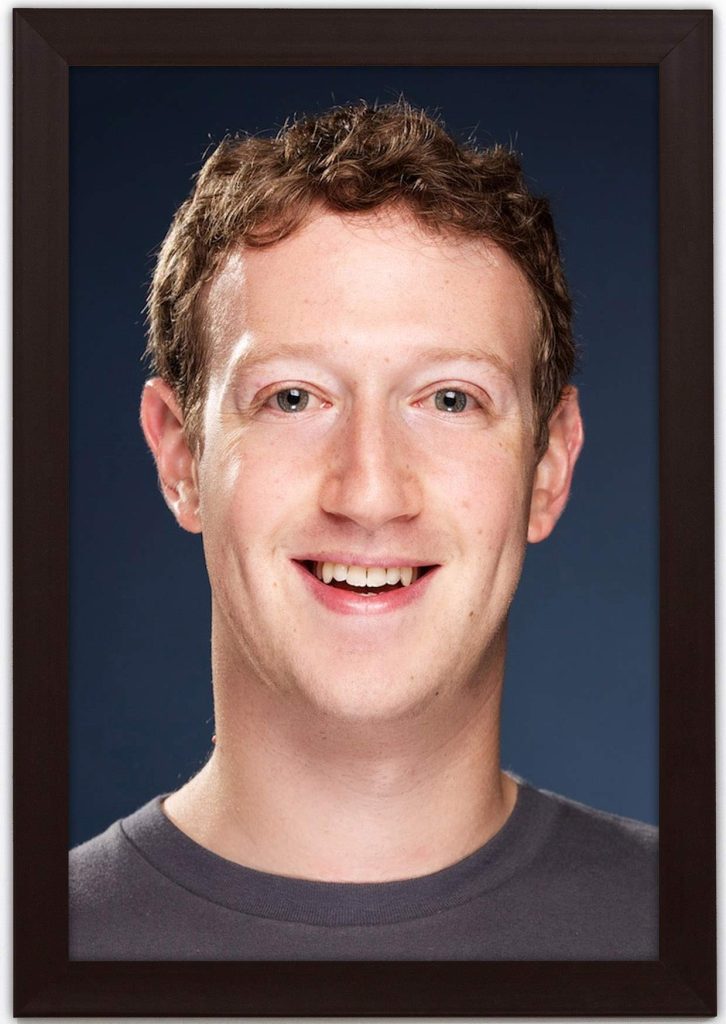

In 2026, the tech world was rocked by a serious AI safety case involving Mark Zuckerberg, the CEO of Meta. The company, long a trailblazer in social media and virtual reality, found itself at the center of a storm that underscored the critical need for robust AI governance.

The case emerged after incidents involving Meta’s AI-driven platforms raised concerns about user privacy, misinformation, and algorithmic bias. While Zuckerberg had long championed the transformative potential of artificial intelligence, critics argued that these technologies had been deployed rapidly and without adequate safety measures.

At the heart of the controversy was Meta’s advanced AI system, used for content moderation across its platforms. Reports alleged that the AI was not only failing to filter out harmful content accurately but also inadvertently amplifying misinformation during major political events. The legal and ethical ramifications of these failures prompted a cascade of lawsuits and regulatory scrutiny.

Regulators around the world viewed the situation as a pivotal moment for AI safety standards. As AI systems became increasingly integrated into everyday life, demands for transparency and accountability intensified. Zuckerberg faced not only public backlash but also pressure from governments aiming to impose stricter regulations on tech companies. Animal rights activists and privacy advocates were particularly vocal, accusing Meta of prioritizing innovation over ethical considerations.

In response to the mounting criticism, Zuckerberg took steps to address the situation. He announced a comprehensive review of Meta’s AI practices and committed to enhancing safety protocols. The company began investing in interdisciplinary teams, combining expertise in AI technology, ethics, and social sciences to create more responsible AI systems. This move was seen as a necessary step toward rebuilding public trust.

Additionally, Zuckerberg initiated collaborations with external experts and advocacy groups focused on AI safety. This transparency-driven approach aimed to ensure that Meta’s AI systems would not only function effectively but also align with societal values. Legal scholars noted that the outcome of the case could have far-reaching implications for tech regulation, setting accountability precedents that other companies would likely have to follow.

The AI safety case against Zuckerberg underscored the urgency of addressing ethical concerns in technological advancement. As Meta navigated this challenging period, it became a focal point in discussions about the balance between innovation and responsibility. The year 2026 ultimately served as a stark reminder of the complexities surrounding AI and of the ongoing tension between progress and ethical constraints in an increasingly digital world.
